Part 2: Inside the A19 and A19 Pro SoCs – Now with “Tensor” cores

In Part 1, we covered the sweeping hardware upgrades of the iPhone 17 lineup and how Apple has standardized so many premium features across the board. Today, we turn our attention to the heart of these devices: the new A19 and A19 Pro SoCs. These chips don’t just power the iPhone 17 series—they set the stage for the upcoming M5 Macs and Apple’s broader AI ambitions.

Architecture Overview

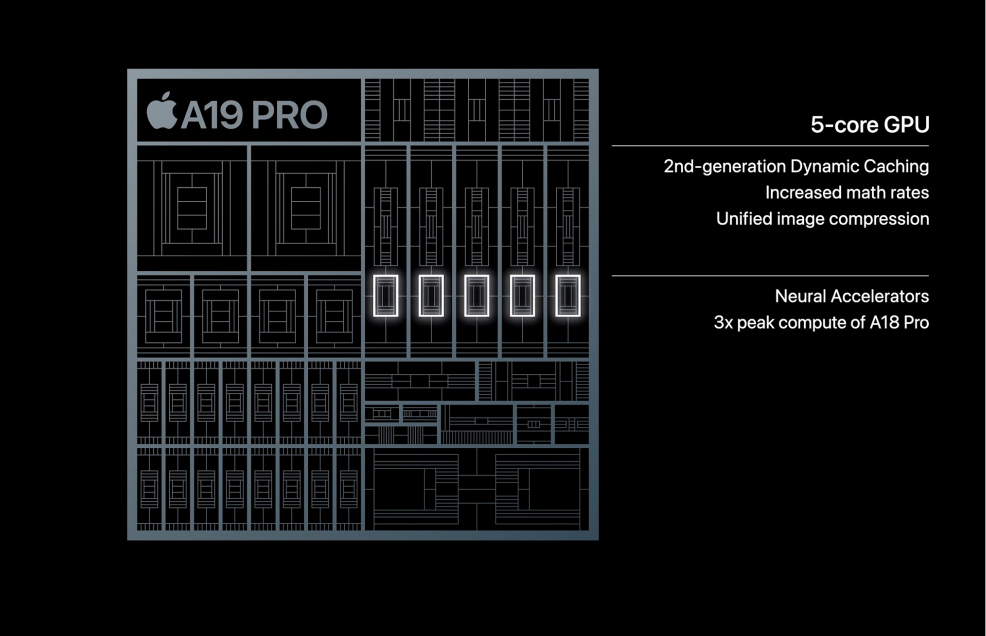

The A19 family is built on TSMC’s third-generation 3nm process (N3P), delivering higher density and efficiency than the previous 3nm node. Apple again segments the lineup by chip and by bin:

– A19: 6-core CPU and 5-core GPU.

– A19 Pro (Binned): 6-core CPU and 5-core GPU, used in the Air.

– A19 Pro (Full): 6-core CPU and 6-core GPU, used in the Pro and Pro Max.

All variants feature a 6-core CPU design with two high-performance and four efficiency cores. Apple has expanded the last-level cache by 50% and improved front-end bandwidth and branch prediction, boosting general-purpose processing.

Performance Improvements

Apple’s official claims:

– +20% CPU performance versus the iPhone 15 Pro Max.

– +50% GPU performance versus the same device.

– Sustained performance: up to 40% better than the A18 Pro, thanks to the new thermal design.

– Battery life: +4 hours on iPhone 17 Pro and +10 hours on Pro Max.

Some analysts and press briefings suggest even larger “3–4× GPU compute gains” for certain workloads and “MacBook Pro levels of compute.” These claims may be workload-specific and are best viewed as early speculation until benchmarks confirm them.

Thermals and Efficiency

The Pro and Pro Max introduce a vapor-chamber cooling system laser-welded into the new aluminum frame. This isn’t a cosmetic change; it’s a direct response to the higher sustained power demands of the A19 Pro’s GPU. Apple’s return to aluminum over titanium signals that thermal conductivity now takes priority over a premium-sounding material. For AI-intensive and graphics-heavy workloads, the change should let the chip hold higher clocks for longer before throttling.

GPU Evolution: Neural Accelerators in Every Core

The star of the A19 Pro is the redesigned GPU. Each GPU core now integrates a neural accelerator specialized for high-throughput matrix multiplication (matmul).

– Matrix Math Units: These accelerators function like NVIDIA Tensor Cores, optimized for mixed-precision FP16/FP32 matmul operations central to AI models.

– Unified Memory Advantage: Placing accelerators directly in the GPU lets them tap into Apple’s unified memory bandwidth—critical for running large models without bottlenecks.

– Inference First: Apple explicitly positions them for running large language models and generative AI locally. Their biggest impact will likely be reducing “time to first token” for Transformer models given long prompts, because prompt processing is dominated by large matrix multiplications.
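To make the mixed-precision point concrete, here is a minimal sketch in plain Python. It is not Apple's implementation and uses no Metal API. It contrasts a matmul that accumulates in FP16 with one that keeps FP16 inputs but accumulates in a wider format. The helper names and the all-ones test matrices are illustrative assumptions, and Python's native FP64 float stands in for an FP32 accumulator.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def matmul(a, b, accumulate_in_fp16: bool):
    """Multiply FP16-valued matrices (lists of rows).

    Inputs are always rounded to FP16. The running sum is either rounded
    back to FP16 after every step, or kept in a wide accumulator
    (Python's FP64, standing in for FP32 in this sketch).
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for k in range(inner):
                product = to_fp16(a[i][k]) * to_fp16(b[k][j])
                if accumulate_in_fp16:
                    # Round both the product and the running sum to FP16.
                    acc = to_fp16(acc + to_fp16(product))
                else:
                    acc += product
            out[i][j] = acc
    return out


# Hypothetical test case: a (2 x 4096) matrix of ones times a (4096 x 2) matrix of ones.
a = [[1.0] * 4096 for _ in range(2)]
b = [[1.0, 1.0] for _ in range(4096)]

print(matmul(a, b, accumulate_in_fp16=False)[0][0])  # 4096.0
print(matmul(a, b, accumulate_in_fp16=True)[0][0])   # 2048.0
```

The FP16 accumulator stalls at 2048 because FP16 cannot represent 2049, so each further `+ 1` rounds back down. That is the kind of error a wider accumulator avoids, and it is why mixed-precision units typically pair narrow inputs with wider sums.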

Some observers speculate about limited on-device personalization (e.g., LoRA adapters), but full training remains infeasible on iPhones given RAM constraints (8–12 GB).
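A rough back-of-the-envelope calculation shows why the RAM ceiling matters. The sketch below uses a hypothetical 3-billion-parameter model, a common rule of thumb of about 16 bytes per parameter for mixed-precision training with Adam, and made-up LoRA dimensions. None of these figures come from Apple.

```python
def weights_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def full_training_gb(num_params: int, bytes_per_param: int = 16) -> float:
    """Rule-of-thumb training footprint: FP16 weights and gradients plus
    FP32 master weights and two Adam moments, about 16 bytes per parameter
    (an assumption; activations are ignored)."""
    return num_params * bytes_per_param / 1e9


def lora_params(num_layers: int, matrices_per_layer: int,
                d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters added by LoRA: each adapted matrix gains two
    low-rank factors of shape (d_in x rank) and (rank x d_out)."""
    return num_layers * matrices_per_layer * rank * (d_in + d_out)


params = 3_000_000_000  # hypothetical model size

print(weights_gb(params, 16))                 # 6.0  (FP16 weights)
print(weights_gb(params, 4))                  # 1.5  (4-bit quantized weights)
print(full_training_gb(params))               # 48.0 (far above 8-12 GB of RAM)
print(lora_params(28, 4, 3072, 3072, 16))     # 11010048 trainable parameters
```

Under these assumptions, inference on quantized weights fits comfortably, full training does not, and a LoRA adapter trains well under 1% of the parameters, which is why it is the plausible route to on-device personalization.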

Neural Engine and Heterogeneous AI Compute

The A19 Pro retains a 16-core ANE. Apple now pairs this with GPU neural accelerators, creating a heterogeneous AI system:

– GPU Neural Accelerators: High-bandwidth, FP16-optimized units for large generative models, Transformers, diffusion, and computational photography.

– ANE: Ultra-efficient inference for CNNs, RNNs, and always-on features like Face ID, text recognition, and speech detection.

Frameworks like Core ML and Metal are expected to handle the scheduling, dispatching Transformer blocks to the GPU while routing lightweight CNN workloads to the ANE. This division of labor aims to maximize both performance and battery life.
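The division of labor described above can be pictured as a simple routing rule. The following is a toy sketch only: the `Unit`, `Workload`, and `route` names are hypothetical, and this is not how Core ML or Metal actually decide placement. In practice the developer-facing control is a compute-units preference on the model configuration, with the framework making the final call.

```python
from dataclasses import dataclass
from enum import Enum


class Unit(Enum):
    GPU_NEURAL_ACCELERATORS = "gpu-neural-accelerators"
    ANE = "ane"


@dataclass(frozen=True)
class Workload:
    name: str
    kind: str              # e.g. "transformer", "diffusion", "cnn", "rnn"
    always_on: bool = False


# Model families the article assigns to the GPU neural accelerators.
GPU_KINDS = {"transformer", "diffusion"}


def route(workload: Workload) -> Unit:
    """Pick a compute unit using the article's rough split (hypothetical rule)."""
    if workload.always_on:
        # Always-on features favor the most power-efficient unit.
        return Unit.ANE
    if workload.kind in GPU_KINDS:
        return Unit.GPU_NEURAL_ACCELERATORS
    return Unit.ANE


jobs = [
    Workload("local LLM chat", "transformer"),
    Workload("image generation", "diffusion"),
    Workload("Face ID", "cnn", always_on=True),
    Workload("text recognition", "cnn"),
]
for job in jobs:
    print(f"{job.name}: {route(job).value}")
```

A real scheduler would also weigh model size, supported operators, thermal state, and contention between units. The point here is only the high-level split between throughput-oriented and efficiency-oriented hardware.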

Connectivity Upgrades

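The A19 family is joined by new Apple-designed wireless silicon. The N1 wireless chip brings Wi-Fi 7, Bluetooth 6, and Thread, and the C1X modem, used in the iPhone Air, handles cellular. These are companion chips rather than blocks inside the A19 itself. Pro models add USB 3 wired transfers, accelerating professional workflows like raw photography and log video.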

Implications for M5 Macs

Apple’s pattern is consistent: A-series innovations become the blueprint for M-series chips. The A19 Pro’s GPU neural accelerators will almost certainly appear in the M5 family, scaled up with dozens of cores and massive unified memory pools. This would address a key bottleneck for Macs: slow Transformer prompt processing. A future M5 Ultra with matmul accelerators and up to 192 GB unified memory could, if it materializes, deliver workstation-class AI capabilities on the desktop and hold models too large for the VRAM of typical discrete GPUs.
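A simple estimate illustrates why prompt processing, rather than token generation, is the part that matmul hardware helps. The sketch below uses the common approximation of about 2 FLOPs per parameter per token (ignoring attention costs) and assumes generation is limited by reading the weights once per token. Every figure is a hypothetical placeholder, not a measured or announced specification.

```python
def prefill_seconds(num_params: float, prompt_tokens: int,
                    flops_per_second: float) -> float:
    """Compute-bound estimate of prompt processing time.

    Uses the ~2 * params FLOPs-per-token approximation (an assumption).
    """
    return 2 * num_params * prompt_tokens / flops_per_second


def decode_tokens_per_second(num_params: float, bits_per_param: int,
                             bytes_per_second: float) -> float:
    """Bandwidth-bound estimate of generation speed: every new token
    requires streaming all weights from memory once."""
    weight_bytes = num_params * bits_per_param / 8
    return bytes_per_second / weight_bytes


# Hypothetical scenario: 8B-parameter model, 4-bit weights, 4096-token prompt.
params = 8e9
prompt = 4096

slow = prefill_seconds(params, prompt, flops_per_second=20e12)   # 20 TFLOPS
fast = prefill_seconds(params, prompt, flops_per_second=80e12)   # 80 TFLOPS
rate = decode_tokens_per_second(params, 4, bytes_per_second=400e9)  # 400 GB/s

print(f"time to first token at 20 TFLOPS: {slow:.2f} s")  # 3.28 s
print(f"time to first token at 80 TFLOPS: {fast:.2f} s")  # 0.82 s
print(f"generation speed: {rate:.0f} tokens/s")           # 100 tokens/s
```

In this model, quadrupling matmul throughput cuts time to first token by 4x while generation speed stays the same, since the latter depends on memory bandwidth. That matches the argument that dedicated matmul units mainly fix slow prompt processing.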

Closing Thoughts

The A19 Pro marks a paradigm shift in Apple’s silicon. By embedding matmul accelerators into the GPU, Apple has built a heterogeneous AI platform that pairs the ANE’s efficiency with GPU-class throughput. This architecture is explicitly aimed at inference—especially large-scale generative AI models—and sets the stage for Macs as personal AI workstations.

This year isn’t just about faster iPhones. It’s about Apple re-engineering its silicon around the future of on-device AI, which we pick up in Part 3.